This article has originally appeared on Hacker News.

Most People Don’t Know What Vibe Coding Is

Vibe coding is not what people think it is.

A Y Combinator video compares the state of vibe coding now to the first cars that looked like horse-drawn carriages. That first car design had too many unnecessary limitations as a result. Once people figured out they could do better, real cars appeared. Like that, vibe coding right now is in its initial sub-optimal phase.

The analogy is good but incomplete. Vibe coding is in its early stages. It is an unclear vision, a feeling about what an app should look like. Computer code doesn’t work like that: it needs clear, concrete 0s and 1s. Programmers are forced to translate vague intentions into exact instructions, often doing a lot of work to clarify the original vibe into concrete rules.

There is a Vast Difference Between Traditional Coding and Vibe Coding

Vibe coding delivers code without asking for requirements. It’s an ongoing experiment. When someone succeeds with vibe coding, they rave about it. When vibe coding fails, few will share their experience. We’re dealing with Survivorship Bias here: those who make it tell the story. And there are far fewer stories from those who don’t make it.

This is similar to the missing stories of dolphins that forced people to drown. There is a reason you don’t hear about them. People, in general, are less likely to brag about their failures. As a result, we get a distorted picture of how effective vibe coding is: more myth than method, built on selectively shared success stories.

When you hear success stories about vibe coding, you think you can repeat it. You can’t. Those who succeeded most likely had different tasks, used different prompts, and thought differently. AI models hallucinate. Different prompts deliver vastly differing code, while traditional coding follows more or less the same standards.

The Key Principles of Traditional Coding

Vibe coding follows a process without consistency and repeatability, core qualities of any engineering discipline. Without those, success becomes a lucky exception.

Traditional coding was built to avoid that kind of guesswork. It relies on precise algorithms, follows exact patterns, and uses standard structures that make code predictable and maintainable. Experts like Martin Fowler have spent decades formalizing these standards so developers don’t rely on gut instinct. They build on shared logic that scales.

I get it; it's an appealing idea for a non-technical person to skip communicating with a boring, nitty-gritty geek and get the code made by yourself. My take on this is, why don’t you apply the same logic to healthcare? Why not ask AI for advice on do-it-yourself heart surgery?

Where Vibe Coding Works Well

Don’t get me wrong, there are great applications for vibe coding. Making an app just for fun is one such use case. When security and data privacy are unnecessary, vibe coding is excellent! Not so when you want to build an app for a health insurance or medical provider. Vibe coding for a flight control system, NASA rocket ship propulsion control system, anyone?

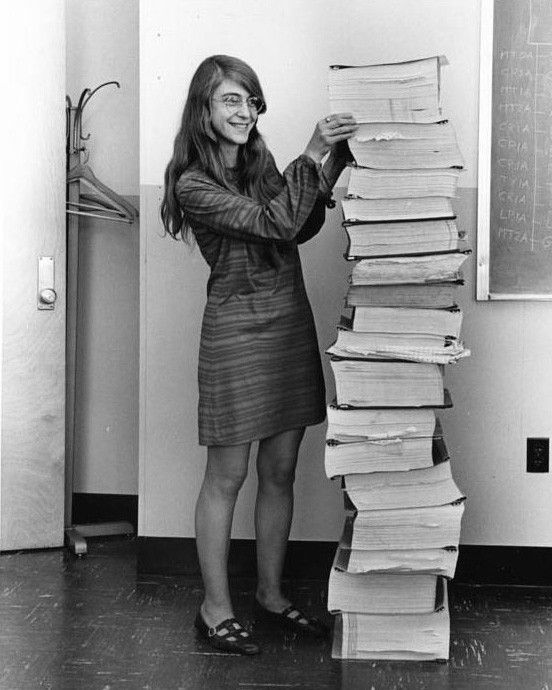

Margaret Hamilton was a pioneering NASA software engineer, here pictured with the code she wrote for the Apollo program that landed humans on the Moon.

Would you vibe code that? Maybe you’d risk it, but that’s too much uncertainty for most people. And the problem isn’t that we’re overly cautious or scared of new tech. It’s that we already know these systems sometimes go off the rails, and when they do, it’s not a glitch. It’s real consequences for real people.

How AI Works Defines Where Vibe Coding Fails

The way AI works is that it continuously forecasts the next most likely token, regardless of whether the method is applied to a human language or code.

To build an AI code generation engine, you take all the existing code in that language that you can get your hands on. Most of the publicly available code in the world is written by Junior-level developers. Senior developers and corporations treat their code as intellectual property and don’t share most of their code so freely. This is why most good working code is in the private domain and not open source.

Let’s take Python. Like a human language, it has a specific standard sentence structure with its own must-have tokens. AI uses forecasting to build code by continuously selecting the next most likely token to arrive at functional code. If you’re interested, Microsoft has a lot of technical breakdowns on the details of how forecasting works.

The open-source code is primarily experimental, and only a tiny fraction of that is corporate code that companies release into the public domain, like Lama and Red Hat. Another aspect of open code is that it can be partially open, so it isn’t likely to include enterprise-level functions and examples like advanced access rules, permission systems, authentication, and authorization. Because public code is the primary training source, AI learns from examples that fall short of enterprise-level quality.

But simplifying, predicting what “usually” comes next isn’t the same as understanding what’s true. When context shifts or edge cases appear, this kind of pattern-matching breaks down. And in code, completing without understanding can quietly introduce bugs that are hard to catch and costly to fix.

Who has Access to High-Quality AI-Generated Code (and Why)

People using AI want to generate enterprise-quality code, but they can’t. Huge companies like Amazon, Oracle, and Microsoft have massive volumes of trustworthy code that is verified, standardized, and quality-checked by generations of software engineers. AI models trained on code like that would be much more reliable, but the problem is intellectual property rights. Because their code is the corporations' intellectual property and the source of their market valuations, any AI models trained on it can’t and won’t be public.

This is why you may have heard about a minor scandal OpenAI got into when using private data off of GitHub, a case called “Zombie Data.” AI models that use private data for training are usually used by those companies internally. Inside those companies, they generate high-quality vibe code.

Companies with large volumes or proprietary code can use vibe coding internally with great results. The problem they solve is generating standardized code, not generating just any code fast. With models trained on massive volumes of high-quality code they own they can ensure the code they produce maintains the style, the syntax, and the commenting conventions, generating code that’s similar to the code 1,000s of engineers have written already. However, companies like that are unlikely to use unverified open-source code from GitHub to train their AI models.

Great Use Cases for Vibe Coding

Vibe coding is an excellent tool for creating simple apps and websites faster, cheaper, and with more creativity than before.

Many services like Cursor, Bolt, Replit, Lovable, and various AI chatbots like Claude already help anyone develop sites and apps, so long as limited flexibility is not an issue. Quickly and cheaply generating apps and sites with vibe coding is great for creative agencies or entrepreneurs with an innovative vision, so long as no long-term support is needed. Ideas that grow into successful businesses can hire software engineers to create a new codebase that can scale, be secure, and remain maintainable. But that handoff isn't always smooth. Replacing AI-generated code often means starting over because the foundation isn't built for extensibility. What starts as a shortcut can become a constraint once real growth begins.

Companies can also use vibe coding, but they must have the code and prepare it for model training by having extensive documentation and code comments describing how functions, classes, and modules work. Otherwise, the model has no reliable reference point — it starts generating based on patterns. And that's where things fall apart fast: vague outputs, unexpected behaviors, and hours lost trying to reverse-engineer your system.

The Way AI Model Training is Designed Causes it to Use Outdated Code

One of the unspoken issues about AI code generators is the delay in catching up with the latest code updates.

Y Combinator and Medium posts have a number of discussions on version control problems for AI-generated code.

AI models train on existing code, so much of what they learn from is already behind current standards. Since most of the available training data is obsolete, the model assigns more weight to older patterns in its predictions. As a result, it tends to generate code using deprecated syntax or practices.

Vibe Coders’ Blind Spot

Vibe coders don’t realize problems caused by different code versions, but it inevitably becomes a barrier. They’re not engineers, so they cannot tell whether the code they generate is outdated or valid. As a result, they ship things that break silently, and they have no idea where or why they fail.

Every new version of a programming language brings changes, and AI models aren’t version-aware. Google’s Vertex AI offers advice on overcoming this problem, but it’s probably not a gude vibe coders will read.

Most version updates fall into three categories:

- Deprecated functions that stop working

- Patches to existing functions that require new syntax, including security updates

- New implementations that the AI model will ignore

Two Ways to Overcome AI Model Training Shortcomings (and Two Problems with the Solutions)

Vibe coding models often produce old code, and that’s a recipe for security bugs, especially if the latest code version’s critical security patches were missed. Consequently, you get failed supply chain security checks, policy violations, and blocked releases.

In theory, AI models could avoid this by learning from user feedback, choosing one code version over another. Stackoverflow and Quora are full of discussions on this topic.

But there are two issues here. First, vibe coders don’t have the expertise to evaluate which version is correct, yet the model still relies on them to steer it. Second, new language versions lack real-world code examples, so older patterns dominate and skew the model’s predictions.

Until those two problems are solved, vibe coding will stay unreliable, especially when security matters.