GPU VM Platform as a Service

On-demand user convenience without owning hardware or running ops

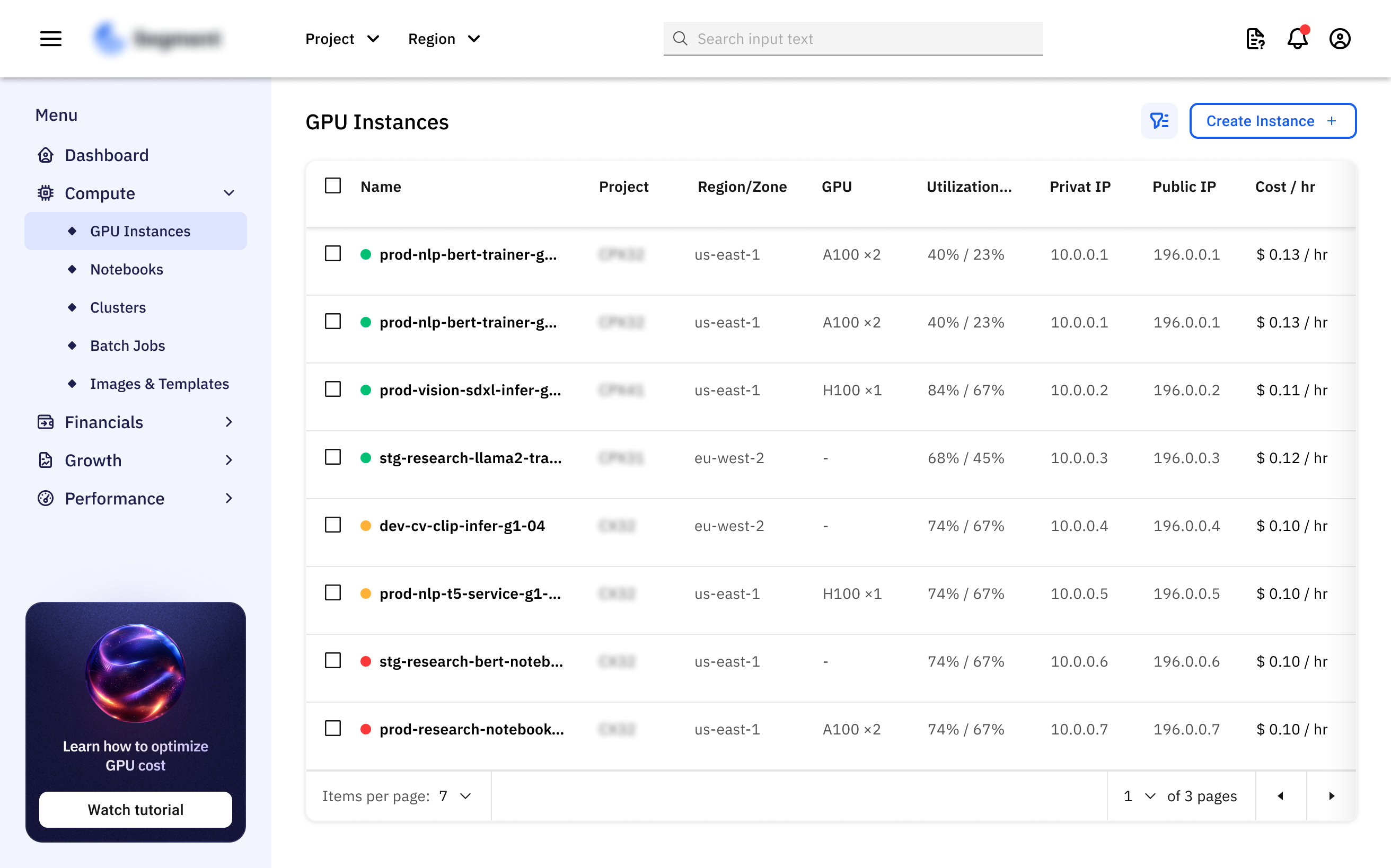

Our client is a Latin American cloud service provider that is pivoting to become an AI service provider, offering managed, on-demand GPU compute with cloud-like hosting.

They wanted a cloud-style platform where users launch NVIDIA-powered GPU VMs or workspaces on demand via a web portal or Terraform.
